The Assumption Surface: Why Agent-Driven Capital Favors Transparent Protocols

AI agents can't model what they can't observe. We introduce the assumption surface, a framework for measuring protocol opacity, and explain why minimizing it will determine which protocols capture agent-driven capital.

Most DeFi protocols are only partially observable. As AI agents become serious participants in onchain capital allocation, the protocols that are most transparent and machine-parseable will capture a disproportionate share of agent-driven activity. The goal of this post is to introduce a concept I'm calling the assumption surface, and argue that minimizing it is a precondition for the kind of protocol-specific intelligence that will actually work.

From protocol-specific intelligence to protocol-specific observability

In our previous research, we argued that effective AI agents in DeFi need protocol-specific training rather than generic prediction. The core thesis: agents trained in sandbox environments that replicate a protocol's actual mechanics (fee models, liquidation logic, incentive structures, state transitions) develop more robust strategies than agents trained on abstracted market signals. We proposed Mixture of Experts architectures to integrate specialized protocol knowledge, and drew parallels with evolutionary AI research where complex behaviors emerge from interaction with rich, rule-based environments.

That was the demand side: what agents need in order to operate effectively. This post addresses the supply side: what protocols need to provide.

The connection is direct: if agents need to internalize protocol mechanics, then the protocol has to make those mechanics observable. This is not a given. The degree to which DeFi protocols actually expose their operational logic varies enormously, and the gap between "technically open source" and "genuinely observable to an autonomous system" is much wider than most people realize.

The agentic readability precedent

Before getting into DeFi specifically, it's worth noting that this dynamic is already playing out in e-commerce.

OpenAI's Agentic Commerce Protocol and Google's Universal Commerce Protocol converge on the same principle: in a world with increasing agent participation, machine-readability determines selection. AI agents query data feeds, parse product attributes, and evaluate metadata, making merchants whose data isn't structured for agent consumption effectively invisible.

Bain & Company estimates that 30% to 45% of US consumers already use AI to research and compare products. McKinsey's analysis of agentic commerce projects that AI agents could mediate $3 trillion to $5 trillion in global consumer commerce by 2030, and argues that designing the "agent experience" is becoming as important as designing the customer experience.

The translation to DeFi is pretty direct. As autonomous agents start executing trades, managing positions and rebalancing portfolios, the protocols whose behavior is most observable will capture the most agent-driven capital. The things being evaluated are financial protocols rather than product catalogs, but the mechanism is the same.

Beyond open source: the assumption surface

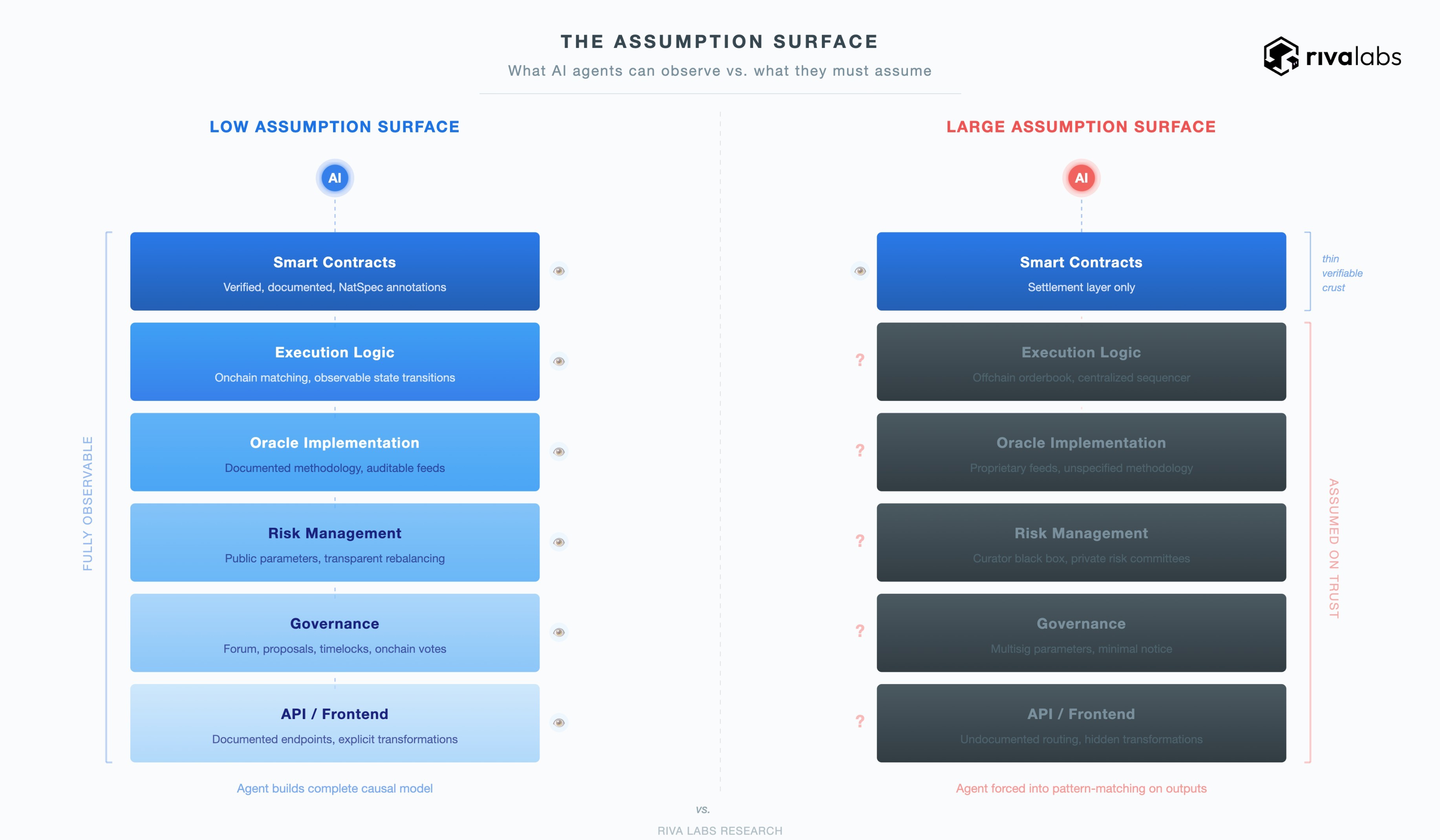

Open source is necessary but insufficient for agent compatibility. The variable that actually matters is what I'm calling the assumption surface: the total set of assumptions an agent must make about a protocol's behavior because components are unobservable, undocumented, or not formally verifiable.

A protocol's actual behavior is determined by its entire operational stack, not just its smart contracts. For many modern DeFi protocols, the onchain contracts are really just the settlement layer: a thin verifiable crust on top of a much larger body of logic that may be partially or entirely opaque. Every opaque component forces the agent to substitute an assumption for causal understanding, and each assumption is a potential failure mode.

More precisely: the assumption surface is a function of how much of a protocol's behavior can be derived from publicly observable, machine-readable sources (verified contracts, event emissions, documentation, governance records, API specs) versus how much must be inferred, guessed, or taken on trust.
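One way to make this definition concrete is a toy score: list the components that determine a protocol's behavior, mark each as observable or not, weight each by how much of the protocol's economically relevant behavior it drives, and take the weighted share that must be assumed rather than derived. This is a minimal sketch under stated assumptions; the component names, the weights, and the `assumption_surface` function are hypothetical illustrations, not a measurement methodology proposed in this analysis.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Component:
    name: str
    weight: float     # hypothetical share of protocol behavior this component determines
    observable: bool  # derivable from verified contracts, events, docs, governance records or API specs

def assumption_surface(components: list[Component]) -> float:
    """Weighted share of behavior the agent must assume (0.0 means fully observable)."""
    total = sum(c.weight for c in components)
    if total <= 0:
        raise ValueError("component weights must sum to a positive value")
    hidden = sum(c.weight for c in components if not c.observable)
    return hidden / total

# Two hypothetical stacks; all weights are illustrative only.
onchain_lending = [
    Component("settlement contracts", 0.5, True),
    Component("oracle configuration", 0.3, True),
    Component("governance process", 0.2, True),
]
hybrid_perps = [
    Component("settlement contracts", 0.2, True),
    Component("offchain matching engine", 0.5, False),
    Component("oracle configuration", 0.2, False),
    Component("governance process", 0.1, True),
]

print(f"{assumption_surface(onchain_lending):.2f}")  # 0.00
print(f"{assumption_surface(hybrid_perps):.2f}")     # 0.70
```

The hard part in practice is choosing the weights, which is itself a judgment about how much each layer matters; the sketch only shows the shape of the quantity, not how to calibrate it.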

A fully transparent, onchain, well-documented protocol has a near-zero assumption surface. An agent interacting with it can, in principle, build a complete behavioral model. A protocol with lots of offchain execution, proprietary risk management, opaque oracle implementations, and undocumented backend logic has a large assumption surface. The agent isn't just reasoning under uncertainty about market conditions (which is expected), but about the system's own behavior. That's a fundamentally different kind of uncertainty, and a much more dangerous one.

Why does this matter? Because an agent that mismodels market dynamics loses money on a trade. An agent that mismodels protocol mechanics can be liquidated, drained, or locked into positions it never intended to hold.

The layers of opacity

The assumption surface isn't one thing. It emerges from specific layers of a protocol's operational stack, each introducing its own category of unobservable behavior.

Offchain order matching and sequencing

A growing number of DeFi protocols, especially in perpetual futures, have significant offchain execution components. Protocols with offchain orderbooks and centralized sequencers handle matching, priority assignment, and liquidation sequencing in systems whose logic can't be derived from onchain data.

Consider a perpetual futures protocol running its own L1 with a centralized sequencer. The matching engine's behavior (how it prioritizes orders, how it sequences liquidations under stress, and what latency characteristics it exhibits) can be entirely opaque to an onchain observer. An AI agent trained on the protocol's smart contracts would understand settlement mechanics but would be blind to execution dynamics. And in perpetual markets, execution dynamics are precisely where edge exists. The agent would be operating on assumptions about fill behavior, liquidation priority, and sequencer fairness that it cannot verify.
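A toy illustration of why liquidation sequencing matters: the same set of stressed positions, processed against the same limited liquidation capacity, produces different outcomes depending on the ordering policy the sequencer applies. Both policies (`by_arrival`, `by_margin`), the capacity figure, and the positions are hypothetical; the point is only that nothing in the settlement contracts tells the agent which policy is in use.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class Position:
    owner: str
    size: float          # notional the liquidation engine must close
    margin_ratio: float  # lower means closer to insolvency
    arrival: int         # order in which the position became liquidatable

def liquidate(positions: list[Position], capacity: float,
              order_key: Callable[[Position], float]) -> list[str]:
    """Close positions in the sequencer's chosen order while capacity lasts.

    Positions that don't fit are skipped and wait for a later round,
    possibly at a worse price.
    """
    closed: list[str] = []
    remaining = capacity
    for p in sorted(positions, key=order_key):
        if p.size <= remaining:
            closed.append(p.owner)
            remaining -= p.size
    return closed

def by_arrival(p: Position) -> float:  # hypothetical policy: first come, first served
    return p.arrival

def by_margin(p: Position) -> float:   # hypothetical policy: riskiest position first
    return p.margin_ratio

book = [
    Position("retail", 30.0, 0.05, arrival=1),
    Position("agent", 40.0, 0.04, arrival=2),
    Position("whale", 60.0, 0.02, arrival=3),
]

print(liquidate(book, 80.0, by_arrival))  # ['retail', 'agent']
print(liquidate(book, 80.0, by_margin))   # ['whale']
```

Under one policy the agent's position is closed in the current round; under the other it is deferred. An agent that only sees the settlement contracts cannot tell which world it is in.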

Compare this to a fully onchain execution model, where every state transition is observable in the contracts and transaction history. The agent has access to a complete behavioral record. It can learn not just what the protocol does normally, but how it behaves under stress, because every historical stress event is fully recorded.

To be clear, the point isn't that offchain components are inherently worse as products. They often deliver better execution speed, deeper liquidity, and superior UX. The point is that they are opaque to the agent, and that opacity directly constrains the quality of the behavioral model the agent can build.

Proprietary risk management layers

A second kind of opacity arises when protocols delegate critical decisions to external parties whose reasoning isn't public. The clearest example is the curator model.

In protocols that use curators or vault operators, the smart contracts define the lending or allocation primitives, but the actual risk parameters (which assets get accepted, what LTV ratios get set, how allocations get rebalanced, how the system responds to market shocks) are determined by external actors. Each curator's strategy can be a black box from the agent's perspective.

An agent interacting with a curated vault doesn't know why a given allocation was chosen, when the curator will rebalance, or how the curator will respond to a stress scenario. It can observe outcomes onchain after the fact, but it can't model the decision process itself. This pushes the agent into reactive pattern-matching rather than proactive mechanism-aware reasoning, which is exactly the limitation we identified in our previous research.

The same dynamic applies to any protocol where operational decisions are made by entities whose logic isn't publicly documented: multisig-controlled parameter updates, private risk committees, proprietary rebalancing algorithms operating through privileged backend access.

Oracle implementations

Oracle behavior is one of the most consequential and least discussed sources of assumption surface. For any agent reasoning about liquidation risk (a core use case for protocol-specific intelligence), the oracle's behavior under stress is a critical variable.

Two lending protocols can both claim to "use Chainlink" while implementing it in materially different ways: different deviation thresholds, heartbeat configurations, staleness checks, grace periods, fallback mechanisms. Some of this is visible in the contracts, but the oracle infrastructure itself (how decentralized oracle network nodes aggregate data, what sources they use, how they handle outliers, their internal consensus mechanism) is partially opaque.
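To make "materially different ways" concrete, the sketch below has three hypothetical lending protocols read the same feed value but apply different staleness limits and fallback behavior. The two fields loosely mirror the answer and last-update timestamp a Chainlink feed reports, but the wrapper logic, thresholds, and fallback price are illustrative assumptions, not any protocol's actual code.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class FeedRound:
    answer: float    # price reported by the feed
    updated_at: int  # unix timestamp of the feed's last update

@dataclass(frozen=True)
class OracleConfig:
    max_staleness: int               # seconds after which a reading is treated as stale
    fallback_price: Optional[float]  # hypothetical secondary source; None means revert

def usable_price(cfg: OracleConfig, rnd: FeedRound, now: int) -> Optional[float]:
    """Price the protocol would act on, or None if it would revert (pausing liquidations)."""
    if now - rnd.updated_at <= cfg.max_staleness:
        return rnd.answer
    return cfg.fallback_price

# Same feed reading, last updated two hours ago.
stale_round = FeedRound(answer=2000.0, updated_at=1_700_000_000)
now = stale_round.updated_at + 2 * 3600

protocol_a = OracleConfig(max_staleness=3600, fallback_price=None)    # strict, reverts
protocol_b = OracleConfig(max_staleness=86_400, fallback_price=None)  # lenient, uses stale price
protocol_c = OracleConfig(max_staleness=3600, fallback_price=1850.0)  # strict, falls back

for name, cfg in [("A", protocol_a), ("B", protocol_b), ("C", protocol_c)]:
    print(name, usable_price(cfg, stale_round, now))
```

The same feed yields three behaviors: liquidations pause, proceed at a two-hour-old price, or proceed at a different price entirely. An agent that knows only "uses Chainlink" cannot distinguish them.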

The situation gets worse for protocols using proprietary oracles, custom TWAP implementations, or oracle solutions where the methodology isn't fully specified. The agent doesn't know what price it will get under unusual market conditions, or when the oracle might diverge from actual market reality. For an agent managing positions near liquidation thresholds, this uncertainty is directly consequential.
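Custom TWAP implementations show why methodology matters. After a sharp move, a time-weighted average lags spot by an amount that depends on the window length and sampling, which an agent can only model if those are specified. A minimal sketch, assuming a piecewise-constant price path; the prices and window lengths are made up for illustration.

```python
def twap(samples: list[tuple[int, float]], now: int, window: int) -> float:
    """Time-weighted average price over [now - window, now].

    samples: (timestamp, price) pairs sorted by time; each price holds until the next sample.
    """
    start = now - window
    if window <= 0 or not samples or samples[0][0] > start:
        raise ValueError("need a positive window fully covered by samples")
    weighted_sum = 0.0
    for i, (t, price) in enumerate(samples):
        next_t = samples[i + 1][0] if i + 1 < len(samples) else now
        seg_start, seg_end = max(t, start), min(next_t, now)
        if seg_end > seg_start:
            weighted_sum += price * (seg_end - seg_start)
    return weighted_sum / window

# Made-up path: price holds at 100, then drops to 70 one minute before `now`.
prices = [(0, 100.0), (540, 70.0)]
now = 600
for window in (60, 300, 600):
    print(window, twap(prices, now, window))  # 60 70.0 / 300 94.0 / 600 97.0
```

A long position with a liquidation price of 90 would be liquidated against spot (70) but not against either longer TWAP (94 or 97). Which reference the protocol actually uses is exactly the kind of detail that must be documented before an agent can model it.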

Frontend, backend, and API behavior

A frequently overlooked dimension: how does the protocol's frontend construct transactions? What default slippage parameters does it apply? Does the backend route through private mempools or public ones? Are there backend services that preprocess or filter data before it reaches the user? Do APIs have rate limits, delays, or transformations that affect the information available to programmatic clients?

An agent interacting with a protocol's smart contracts directly may observe different outcomes than one going through the frontend or API. This discrepancy is often undocumented. For agents relying on API endpoints for real-time data (state queries, position monitoring, event subscriptions), any undocumented filtering, delay, or transformation introduces assumptions about data accuracy and completeness.
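Default slippage is a simple case of this divergence. The minimum output a swap enforces depends on the tolerance applied when the transaction is built, so a frontend or API that silently applies its own default produces a different worst-case outcome than an agent calling the contract directly with its own tolerance. A minimal sketch; the `min_amount_out` helper and both basis-point values are hypothetical, not any specific frontend's defaults.

```python
def min_amount_out(quoted_out: int, slippage_bps: int) -> int:
    """Minimum acceptable swap output in the token's smallest units.

    Uses integer math that rounds down, mirroring typical onchain integer division.
    """
    if not 0 <= slippage_bps <= 10_000:
        raise ValueError("slippage must be between 0 and 10,000 basis points")
    return quoted_out * (10_000 - slippage_bps) // 10_000

quote = 1_000_000_000                         # hypothetical quoted output
frontend_default = min_amount_out(quote, 50)  # hypothetical 0.50% frontend default
agent_direct = min_amount_out(quote, 10)      # agent's own 0.10% tolerance

print(frontend_default, agent_direct)  # 995000000 999000000
```

If the agent's model assumes its own tolerance while its transactions actually pass through a layer applying the wider default, it has silently accepted up to four times more adverse execution than it planned for.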

Governance and parameter mutability

Even fully onchain, open-source protocols face dynamic uncertainty through governance. Parameter changes (interest rate curve adjustments, collateral factor updates, fee modifications, liquidation penalty revisions) can alter protocol behavior in ways that invalidate an agent's learned model. This is inherent and unavoidable.

What varies is the transparency of the governance process itself. Some protocols have structured, public procedures: forum discussions, formal proposals, defined voting periods, timelock delays. An agent monitoring these channels can anticipate parameter changes and adjust. Other protocols rely on multisig-controlled parameters that can be modified with minimal public notice. In those cases, the agent has no way to see changes coming.
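The difference between these regimes can be expressed as a check an agent might run on each parameter change: was the change visible early enough for the agent to re-fit its model or unwind before it took effect? The `ParamChange` fields, the parameter names, and the six-hour reaction time are hypothetical.

```python
from dataclasses import dataclass

HOUR = 3600

@dataclass(frozen=True)
class ParamChange:
    name: str
    announced_at: int  # when the change became publicly visible (proposal, queued timelock tx)
    effective_at: int  # when the change takes effect onchain

def is_managed(change: ParamChange, reaction_time: int) -> bool:
    """True if the agent can see the change at least `reaction_time` seconds before it applies."""
    return change.effective_at - change.announced_at >= reaction_time

# Timelocked proposal: visible 48 hours before execution.
timelocked = ParamChange("collateral_factor", announced_at=0, effective_at=48 * HOUR)
# Direct multisig update: first visible at the moment it executes.
multisig = ParamChange("liquidation_penalty", announced_at=0, effective_at=0)

print(is_managed(timelocked, 6 * HOUR), is_managed(multisig, 6 * HOUR))  # True False
```

A timelock turns governance from an unobservable event into a scheduled one, which is what lets the agent treat it as managed uncertainty.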

You can think of this as the distinction between managed uncertainty (changes are dynamic but predictable, because the process is transparent) and unmanaged uncertainty (changes can happen at any time, because the process is opaque).

The ceiling of protocol-specific intelligence

The analysis above leads to a structural conclusion that connects directly to our previous work. If the value of protocol-specific intelligence lies in agents learning actual protocol mechanics rather than abstract market signals, then the effectiveness of that approach has a hard ceiling set by the protocol's transparency.

For a protocol with a near-zero assumption surface, the ceiling is very high. An agent can internalize the complete causal model: every state transition, every fee calculation, every liquidation trigger, every governance-initiated parameter change. The sandbox training environments we proposed earlier can be built with high fidelity, because the protocol's behavior is fully specified.

For a protocol with a large assumption surface, the ceiling is fundamentally lower. No amount of training sophistication can teach an agent to reason about mechanics it can't observe. The opaque components force the agent back into inferring behavior from outputs: correlation-based pattern matching on historical data. This is exactly the generic prediction approach our previous research argued against.

So the assumption surface doesn't just affect the cost of building protocol-specific agents. It determines the maximum effectiveness of protocol-specific intelligence for that protocol.

The transparency flywheel

If protocol-specific intelligence is bounded by observability, and if AI agents are becoming meaningful participants in DeFi capital allocation, you get a self-reinforcing dynamic.

A protocol with a minimal assumption surface enables higher-quality agent training, which produces more effective agents for that protocol. More effective agents attract more agent-driven volume and TVL. More onchain activity generates more training data, further improving agent quality. The protocol deepens its position as the preferred substrate for autonomous DeFi activity.

This is a platform dynamic. Just as software ecosystems accrue value to the platforms that are easiest to build on, agent-driven capital accrues to the protocols whose behavior is most completely modelable. The parallel with agentic commerce holds: merchants optimizing for this emerging behavior capture agent-mediated transactions, while DeFi protocols minimizing their assumption surface capture agent-mediated capital flows.

Over time, this creates selection pressure on protocol design. Teams building new protocols face an explicit tradeoff: add offchain components for better performance and UX (increasing the assumption surface), or keep everything observable and well-documented (maximizing agent compatibility). Both sides have legitimate engineering justifications. But the growing share of agent-driven DeFi activity adds a new, substantial weight to the transparency side, and that weight will increase as agent capabilities improve.

This doesn't mean that protocols with large assumption surfaces will disappear. It means they'll face a structural disadvantage in capturing the fastest growing segment of DeFi participation. Protocols that treat observability as a design priority will attract a compounding share of autonomous capital, while those that don't will rely increasingly on human users alone.

Counterarguments

"AI can reverse-engineer any onchain code"

True for the onchain layer. AI models are demonstrably capable of analyzing smart contract bytecode, and recent research has shown frontier models successfully identifying and exploiting vulnerabilities in deployed contracts. But this argument only applies to what exists onchain. An offchain matching engine, a curator's proprietary risk model, a backend routing algorithm, an undocumented API transformation: these have no onchain representation to analyze. "Everything is public on EVM chains" is accurate at the bytecode level but misleading at the operational level. For many modern DeFi protocols, the onchain contracts represent a fraction of the total operational logic.

"Transparency helps adversarial agents too"

This is correct, and important to acknowledge. The same observability that enables benign agents to build accurate models also enables adversarial agents to find attack vectors. Anthropic's SCONE-bench demonstrated that frontier AI models can exploit smart contract vulnerabilities at increasing rates, with estimated exploit revenue roughly doubling every 1.3 months.

However, security through obscurity has never been a sustainable strategy in software engineering. Open-source software with active audit cultures, formal verification, and bug bounty programs has, over decades, demonstrated better security outcomes than closed-source alternatives. The appropriate response to transparency-enabled attack surfaces is not less transparency but more robustness.

There's also a more concrete version of this objection: MEV. On a transparent protocol, searchers can more efficiently identify extractable value. But MEV extraction operates primarily at the mempool level (pending transactions), not the protocol source level. The ecosystem already recognizes this: Flashbots Protect, encrypted mempools, order flow auctions, and batch auctions are all defenses that protect transaction privacy while assuming protocol transparency.

"Liquidity and network effects matter more than transparency"

An agent managing capital will prioritize protocols where liquidity is deep and execution is reliable, regardless of the assumption surface. Established protocols like Aave dominate their categories because of compounding network effects, battle-tested security, and deep integrations. These moats are real and will persist.

The argument isn't that transparency will displace liquidity as the primary factor. It's that transparency will become an increasingly weighted variable as the share of agent-driven activity grows. If agents systematically achieve better risk-adjusted outcomes on protocols where their behavioral models are more complete (which follows from the analysis above), capital will migrate toward those protocols over time. The moat isn't liquidity alone but rather liquidity combined with model completeness.

"Most DeFi is already open source"

At the smart contract level, this is true for many established protocols (at least on Ethereum). But the whole point is that contract-level openness is insufficient. The operational stack of a DeFi protocol extends far beyond its smart contracts, and it is in these additional layers (execution, risk management, oracle implementation, application logic) that opacity exists even in protocols that describe themselves as open source.

Toward an agent-readability standard

The assumption surface framework suggests a set of concrete properties that minimize opacity across each layer. Protocols with these properties are, in the terminology of this analysis, agent-readable: their behavior can be fully modeled by an autonomous system without reliance on unverifiable assumptions.

In practice, an agent-readable protocol would have verified and well-documented smart contracts, complete event emission for all economically relevant state changes, transparent and auditable oracle implementations with documented methodology and failure modes, public governance processes with advance notice for parameter changes (including timelock mechanisms), open or formally specified execution logic (including any offchain components whose behavior affects user outcomes), and clean, documented APIs.

This is obviously a spectrum and not a binary. No protocol will achieve a zero assumption surface across every dimension, but making the spectrum explicit gives protocol teams a design target, while offering agent builders an evaluation framework and capital allocators a risk dimension that current DeFi analysis mostly ignores.
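As a rough way to treat the properties above as a spectrum rather than a pass/fail label, a protocol team or agent builder could track which ones are met and which remain as design targets. A minimal sketch; the checklist wording paraphrases the properties listed above, and weighting them equally is a simplifying assumption, not part of the framework.

```python
CHECKLIST = (
    "verified, documented contracts",
    "complete event emission",
    "documented oracle methodology and failure modes",
    "public governance with timelocks",
    "open or formally specified execution logic",
    "clean, documented APIs",
)

def readability_report(satisfied: set[str]) -> tuple[float, list[str]]:
    """Share of checklist properties met (equal weights) and the ones still missing."""
    unknown = satisfied - set(CHECKLIST)
    if unknown:
        raise ValueError(f"unrecognized properties: {sorted(unknown)}")
    missing = [item for item in CHECKLIST if item not in satisfied]
    return 1 - len(missing) / len(CHECKLIST), missing

# Hypothetical protocol: strong onchain hygiene, opaque execution and API layers.
score, gaps = readability_report(set(CHECKLIST[:4]))
print(f"{score:.2f}", gaps)
```

The missing items, not the score, are the useful output: they name the layers where an agent is still reasoning from assumptions.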

The assumption surface represents an underexplored axis of protocol quality. DeFi evaluation today focuses mostly on TVL, volume, security audit status, and governance structure. As agent-driven participation grows, the observability of a protocol's behavior (how complete the information surface available to autonomous systems actually is) will become a consideration that people can't afford to ignore, alongside these traditional metrics.

The convergence of AI and crypto is still early. As we argued in our previous research, much of the current landscape consists of generic prediction models applied to complex systems. The protocols that will serve as the foundation for the next generation of protocol-specific AI are the ones that treat observability as a design principle.

Latest articles

AI x Crypto

Feb 17, 2026

The Assumption Surface: Why Agent-Driven Capital Favors Transparent Protocols

AI x Crypto

Feb 6, 2026

AI x Crypto: The Case for Protocol-Specific Intelligence

DeFi

Feb 5, 2026

Gas Optimization in DeFi: Tools, Techniques, and Lessons from Onchain Perps

Updates

Feb 4, 2026

Riva Labs: a research-first approach to crypto technology
